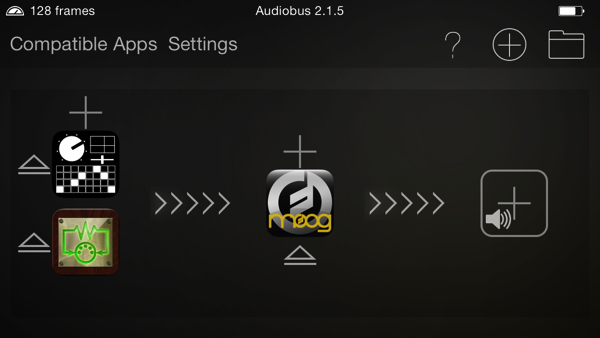

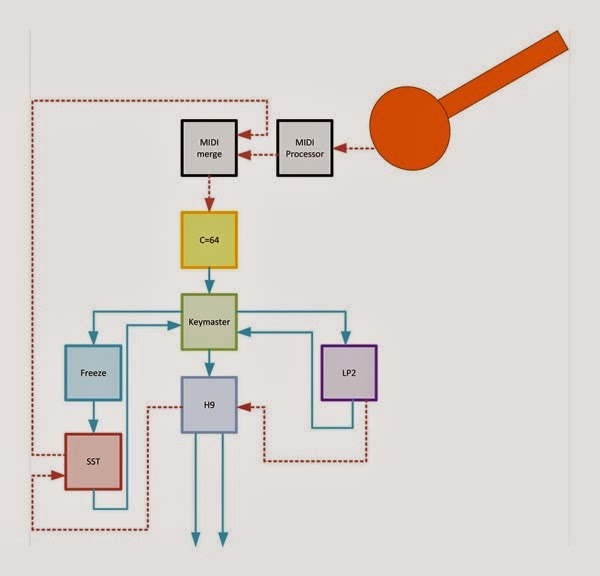

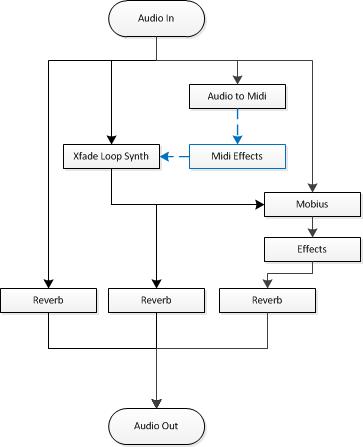

As you might expect if you've been following along for a while, the signal path is complicated. I made a graphical representation to better communicate it (description follows).

Signal path is complex, but colour-coded: green is MIDI, red is control voltage, and the other colours represent different audio signal paths.

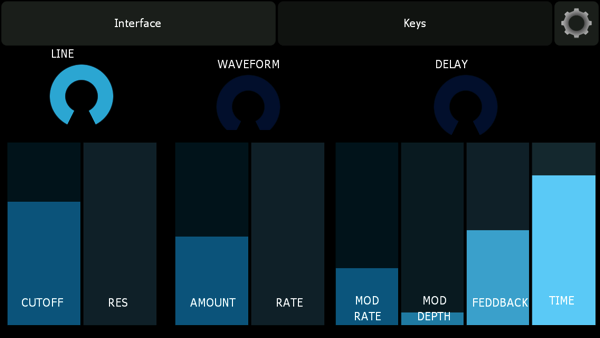

Audio signal path

The audio path starts with the YSL-697Z Professional Trombone, played into my trusty Audio-Technica cardioid condenser clip-on microphone. I have been using this microphone for a while and, in over a hundred gigs, I have never felt the need to look elsewhere. The mic is plugged directly into the Apogee Duet, which provides it with the required phantom power and pre-amplification. The Duet has two inputs and four outputs, and I use all but one of the inputs. The Duet provides the interface to my Pure Data patches running on an iPhone 5 through an app with the unlikely name of MobMuPlat.

Once processed in Pure Data, the audio takes three different paths:

First, a stereo signal is sent to the Meris Ottobit Jr. Its outputs are sent to a pair of mono TC Electronic Ditto loopers modified to accept 5 V control voltage in lieu of footswitch presses (more on this here). The outputs of the two Ditto loopers are sent to two audio summers (left and right).

Second, the left channel is sent through the Moog Minifooger Ring to the Montreal Assembly Count to 5, and then to an EHX Freeze and the Meris Polymoon. The Polymoon’s outputs are sent to two audio summers (left and right).

Third, the right channel is sent to the EHX Superego and Pitch Fork. The signal then goes to the Abattoir (one of my original creations) and the Moog Minifooger Drive before reaching the Meris Mercury7 reverb. The Mercury7’s outputs are also sent to two audio summers (left and right).

The outputs of the two audio summers are sent through a Radial ProD2 to a pair of ZLX-12P powered speakers. To round out the audio path, the Organelle’s outputs are also sent to the two ZLX-12P through a second ProD2. The Organelle provides percussion by running a Pure Data patch that includes my external based on the code for Mutable Instruments' Grids module.
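For readers who prefer text to diagrams, the three processed audio paths can be written down as a small directed graph. This is only a sketch of the routing described above: the node names are shorthand labels picked for the example, the left/right pairs of loopers, summers and speakers are collapsed into single nodes, the Superego is assumed to come before the Pitch Fork (the order in which they are listed), and the Organelle's separate path through the second ProD2 is left out.

```python
# Audio routing as described in the text, written as a directed graph.
# Node names are shorthand labels chosen for this sketch.
AUDIO_ROUTES = {
    "Pure Data": ["Ottobit Jr", "Minifooger Ring", "Superego"],
    # Path 1: stereo signal
    "Ottobit Jr": ["Ditto loopers"],
    "Ditto loopers": ["Summers"],
    # Path 2: left channel
    "Minifooger Ring": ["Count to 5"],
    "Count to 5": ["Freeze"],
    "Freeze": ["Polymoon"],
    "Polymoon": ["Summers"],
    # Path 3: right channel (Superego -> Pitch Fork order is assumed)
    "Superego": ["Pitch Fork"],
    "Pitch Fork": ["Abattoir"],
    "Abattoir": ["Minifooger Drive"],
    "Minifooger Drive": ["Mercury7"],
    "Mercury7": ["Summers"],
    # Common output stage
    "Summers": ["ProD2"],
    "ProD2": ["ZLX-12P"],
}


def paths(graph, start, end, prefix=()):
    """Yield every route from start to end as a tuple of node names."""
    prefix = prefix + (start,)
    if start == end:
        yield prefix
        return
    for nxt in graph.get(start, []):
        yield from paths(graph, nxt, end, prefix)


for route in paths(AUDIO_ROUTES, "Pure Data", "ZLX-12P"):
    print(" -> ".join(route))
```

Running it prints one line per path, which makes it easy to see that all three paths meet at the summers before reaching the speakers.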

MIDI signal path

The MIDI signal path starts with two controllers, the KMI 12 Step and the original Trigger Finger from M-Audio, which are sent to the Apogee Duet through a MIDI Solutions Merger and a Roland UM-ONE MIDI USB interface. Trombone notes are also converted to MIDI signals on the iPhone 5 by the MIDImorphosis app. Most of this MIDI data is consumed in my Pure Data patch, but some is filtered and sent out to control parameters on other devices. After the MIDI out, the first device in the chain is the TEMPODE, which merges tap-generated MIDI clock with the control information it receives. Next in the chain is a MIDI Solutions Quadra Thru that buffers the signal and splits it to the Organelle (through a second UM-ONE) and to two DIY MIDI modules: a DIY MIDIBox and my Ditto loopers controller. MIDI signals are routed from the DIY MIDIBox to all three Meris pedals.

Control voltage signal path

There are two distinct control voltage (CV) signal paths. The first starts with the Patterner, my original creation that replaces an expression pedal anywhere you would normally use one. The Patterner's output is sent to the expression pedal input of the Copilot FX Bandwidth, which outputs three 0-5 V CV signals based on its expression pedal input. Two of these outputs are used to modulate the Pitch Fork and the Ring. I'm considering routing the third CV output to the Count to 5, as I've learned that it can accept control voltage in the 0-3 V range.

The second CV path is generated by my DIY Ditto controller, which sends out 5 V gates to trigger the loopers' "footswitch" functions based on incoming MIDI control signals.
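To make the MIDI-to-gate idea concrete, here is a minimal sketch of the decision such a controller has to make for each incoming message. It is not the actual firmware of my Ditto controller: the CC numbers, the 50 ms pulse length, the "64 and above means pressed" threshold and all the names (`CC_TO_LOOPER`, `Gate`, `cc_to_gate`) are assumptions made up for the example. Only the 5 V gate level comes from the description above.

```python
from dataclasses import dataclass

GATE_VOLTS = 5.0   # from the text: the loopers are triggered by 5 V gates
GATE_MS = 50       # assumed pulse length, not from the text

# Hypothetical mapping of MIDI CC numbers to looper gate outputs.
CC_TO_LOOPER = {20: "left Ditto", 21: "right Ditto"}


@dataclass
class Gate:
    target: str        # which looper's "footswitch" to trigger
    volts: float       # gate level
    duration_ms: int   # how long the gate stays high


def cc_to_gate(message: bytes):
    """Return a Gate for a mapped Control Change message, else None."""
    if len(message) != 3:
        return None
    status, controller, value = message
    if status & 0xF0 != 0xB0:   # 0xBn is Control Change on channel n
        return None
    target = CC_TO_LOOPER.get(controller)
    # Assumption: values of 64 and up count as a "press", lower values are ignored.
    if target is None or value < 64:
        return None
    return Gate(target, GATE_VOLTS, GATE_MS)


print(cc_to_gate(bytes([0xB0, 20, 127])))   # mapped CC, treated as a press
print(cc_to_gate(bytes([0x90, 60, 100])))   # Note On, ignored
```

In real hardware the returned gate would drive an output pin for the given duration; here it is just a value you can inspect.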